Every few weeks, someone sends me yet another AGI prediction piece - like this one - and I find myself repeating the same conversation. To save time (and sanity), I'm writing up a more permanent response.

tl;dr: Transformers are powerful pattern recognizers that thrive in narrow domains, but their dependence on enormous data and compute to achieve even modest abstraction suggests hard limits on the path to AGI. They'll likely be tools within AGI systems - but won't deliver AGI themselves. Without a sober reassessment of their role, and restraint around hype, we risk triggering a damaging AI winter.

There are several fundamental problems with the claim that current transformer (TNN) based language models are on a trajectory toward artificial general intelligence (AGI), let alone superintelligence. Chief among these is the rapid onset of diminishing returns, which emerges from the reliance on encoding schemes - particularly token-based attention - that offer initial performance gains but plateau due to their representational inefficiencies. Many AGI forecasts mistakenly extrapolate an exponential trend from what is, in reality, a sigmoidal curve - an error analogous to the flawed economic and demographic projections that contributed to systemic misjudgements in global policy and finance. Furthermore, many of these forecasts plot performance against human (calendar) time rather than compute time. Plotted against compute, the trend looks far closer to linear, and we are rapidly reaching the limit of our capacity to supply more. There are only so many GPU-hours left to allocate to this particular bonfire.
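To make the extrapolation error concrete, here is a minimal sketch, assuming NumPy is available. It samples only the early stretch of a logistic curve, fits an exponential to those samples, and then compares the two further out. The curve parameters and the sampling window are illustrative assumptions, not measurements of any real model.

```python
import numpy as np

def logistic(t, ceiling=1.0, rate=1.0, midpoint=0.0):
    """A sigmoidal curve that saturates at `ceiling` (illustrative parameters)."""
    return ceiling / (1.0 + np.exp(-rate * (t - midpoint)))

# Observe only the early part of the curve, well before the inflection point.
t_early = np.linspace(-6.0, -2.0, 20)
y_early = logistic(t_early)

# Fit y = exp(intercept + slope * t) by linear regression on log(y).
slope, intercept = np.polyfit(t_early, np.log(y_early), 1)

def exponential_forecast(t):
    """Extrapolate the exponential that was fitted to the early samples."""
    return np.exp(intercept + slope * t)

for t in (-2.0, 0.0, 2.0, 4.0):
    print(f"t={t:+.0f}  logistic={logistic(t):.3f}  "
          f"exponential forecast={exponential_forecast(t):.3f}")
```

On the early samples the exponential fits closely, which is exactly why the mistake is tempting. Past the inflection point the forecast keeps climbing while the underlying curve flattens toward its ceiling.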

We appear to have already passed the inflection point for performance gains in transformer architectures, and the expected asymptotic behaviour strongly suggests that under continued scaling these models will fall short of the vague and handwavy understanding of "AGI" we collectively have.

More critically, transformer-based systems excel primarily because they help us compensate for weaknesses in data encoding, not because they embody any principled alignment with the structure of generalized human cognition. Their architectural limitations - such as quadratic attention complexity, context fragmentation, and positional encoding constraints - all remain unresolved, and these are only the problems for which one can reasonably imagine TNN-friendly solutions.
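As a rough illustration of the quadratic attention cost mentioned above, the sketch below counts the pairwise scores a single full self-attention head computes in one layer, and the memory needed if that score matrix is stored naively. The function names and the 2-byte (fp16) score size are assumptions made for the example.

```python
def attention_scores(sequence_length: int) -> int:
    """Pairwise scores computed by one full self-attention head in one layer."""
    return sequence_length ** 2

def score_matrix_bytes(sequence_length: int, bytes_per_score: int = 2) -> int:
    """Memory for one naively stored score matrix, assuming 2-byte (fp16) scores."""
    return attention_scores(sequence_length) * bytes_per_score

for tokens in (1_000, 2_000, 4_000, 8_000):
    mib = score_matrix_bytes(tokens) / 2**20
    print(f"{tokens:>5} tokens: {attention_scores(tokens):>11,} scores, "
          f"{mib:8.1f} MiB per head")
```

Each doubling of the context length quadruples both numbers.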

As it stands, scaling such models yields sublinear improvements, even in the presence of exponential increases in compute and data. This suggests that scale alone is a poor route to tasks requiring deeper forms of generalization or abstraction. The bottom line here is that transformers will always hit a wall because, within current paradigms, they cannot learn in the way a system would need to in order to develop what we would accept as AGI.
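One way to picture sublinear returns is a power-law loss curve, where loss falls as `compute ** (-exponent)`. The sketch below assumes that functional form, and the `scale` and `exponent` values are hypothetical, chosen only to show the shape. They are not fitted to any published result.

```python
def power_law_loss(compute: float, scale: float = 10.0, exponent: float = 0.05) -> float:
    """Hypothetical loss curve: loss = scale * compute ** (-exponent)."""
    return scale * compute ** (-exponent)

def gains_per_tenfold(steps: int) -> list[float]:
    """Absolute loss reduction bought by each successive 10x increase in compute."""
    losses = [power_law_loss(10.0 ** k) for k in range(steps + 1)]
    return [before - after for before, after in zip(losses, losses[1:])]

for step, gain in enumerate(gains_per_tenfold(6), start=1):
    print(f"10x step {step}: loss falls by {gain:.3f}")
```

Under this assumption every tenfold increase in compute buys a smaller absolute improvement than the one before it, even though the cost of each step grows exponentially.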

To illustrate the broader principle, consider the toy classification task shown below in Figure 1, where five better-understood machine learning models are tasked with solving a multi-class spiral problem using only 10 data points. The variance in performance is stark: certain models (e.g., ANN) generalize better because their structural inductive biases are well aligned with the task geometry, while others (e.g., decision trees) fail outright because their piecewise decision boundaries cannot follow a smooth curve from so few points.

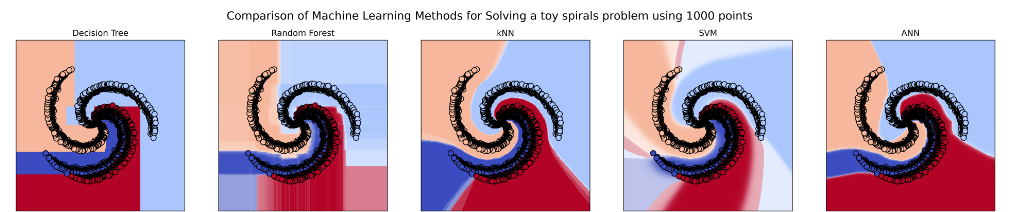

In contrast, Figure 2 demonstrates the same task with 1000 training samples. With sufficient data and a complex enough model, even poorly suited models begin to approximate a useful decision boundary. However, this brute-force success masks the underlying inefficiency: many of these models did not "learn" in a cognitively meaningful way. They simply overfit with enough samples that the overfit still reflects the population. This is wholly distinct from a model that could learn and approximate the trend from a very limited number of examples.

This does not mean these models are fundamentally unsuited to the spiral task: we could easily transform the data so that other models become ideal for it. However, for any given representation there will still be an ideal model, while the others will at best brute-force a poor approximation of the desired "comprehension".
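The spiral experiment can be approximated in a few lines. This is a minimal sketch, assuming NumPy, and it does not reproduce the figures: a 1-nearest-neighbour classifier stands in for a model that purely memorises, and the arm count, pitch, noise level, and seed are all assumptions. It compares memorisation on raw coordinates with 10 and 1000 training points against the same classifier given 10 points after the spiral has been "unrolled" using its known pitch.

```python
import numpy as np

PITCH = 4.0     # radians of rotation per unit radius (assumed spiral shape)
N_CLASSES = 3   # number of spiral arms (assumption)

def make_spirals(n_points, rng, noise=0.05):
    """Sample points from N_CLASSES interleaved spiral arms with balanced labels."""
    labels = np.arange(n_points) % N_CLASSES
    radius = rng.uniform(0.05, 1.0, size=n_points)
    angle = PITCH * radius + 2 * np.pi * labels / N_CLASSES
    angle = angle + rng.normal(0.0, noise, size=n_points)
    points = np.column_stack([radius * np.cos(angle), radius * np.sin(angle)])
    return points, labels

def unroll(points):
    """Map (x, y) to each arm's phase on the unit circle, using the known pitch."""
    radius = np.hypot(points[:, 0], points[:, 1])
    phase = np.arctan2(points[:, 1], points[:, 0]) - PITCH * radius
    return np.column_stack([np.cos(phase), np.sin(phase)])

def nearest_neighbour_accuracy(train_x, train_y, test_x, test_y):
    """Accuracy of a 1-nearest-neighbour classifier (pure memorisation)."""
    distances = np.linalg.norm(test_x[:, None, :] - train_x[None, :, :], axis=2)
    predictions = train_y[np.argmin(distances, axis=1)]
    return float(np.mean(predictions == test_y))

rng = np.random.default_rng(0)
test_x, test_y = make_spirals(600, rng)
small_x, small_y = make_spirals(10, rng)
large_x, large_y = make_spirals(1000, rng)

raw_small = nearest_neighbour_accuracy(small_x, small_y, test_x, test_y)
raw_large = nearest_neighbour_accuracy(large_x, large_y, test_x, test_y)
unrolled_small = nearest_neighbour_accuracy(
    unroll(small_x), small_y, unroll(test_x), test_y
)

print(f"raw coordinates, 10 points:   {raw_small:.2f}")
print(f"raw coordinates, 1000 points: {raw_large:.2f}")
print(f"unrolled, 10 points:          {unrolled_small:.2f}")
```

The unrolled variant only works because the transformation already encodes the structure of the problem. With the right representation a handful of examples is enough; without it, the same classifier needs orders of magnitude more data to trace the same boundary.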

The analogy and lesson for language models here is, I think, clear: performance improves with scale, but only within the bounds of the model's inductive capacity. The sheer volume of data required to get good-quality answers from TNN-based LLMs should be all the warning one needs that these will never deliver on what companies like OpenAI promise. This is the cybernetics hype all over again, and it will damage the research community immeasurably when the various parties duped finally learn they have in fact been duped.

I love this field, and can very much see myself dedicating my life to it, but I worry that when the hype crash comes, we will see study of artificial intelligence fall into the same level of ill repute that cybernetics was unfairly plunged into by the charlatans of the time, for whom we have analogous contemporary figures. As it stands, I fear that there is a winter coming for this field.

The bottom line is that, barring the emergence of a novel architecture or encoding paradigm that enables language representation in a way that is both efficient and structurally aligned with cognitive and semantic principles, current approaches are unlikely to yield AGI. While continued progress in narrow domains remains likely, the extrapolation from statistical language modelling to general intelligence is, at present, speculative and driven largely by unsustainable commercial interests rather than by any empirically demonstrated long-term payoff.

Fundamentally, the TNN is highly effective at recognizing and leveraging statistical and structural patterns within a series, and relating that to another series, which enables impressive results in translation tasks and beyond. However, expecting transformers to achieve human-style AGI is akin to expecting a deep dense feedforward neural network (DNN) or a convolutional neural network (CNN) to independently reach the same. While these models excel within their specific domains, their limitations in abstract reasoning and generalization make them unlikely candidates for AGI in their current form.

My view is this: TNNs may one day help us communicate with an AGI, by helping translate. The TNNs are likely to be a component in some hypothetical AGI system, much as CNNs would help it "see" or DNNs help it "process". However, they are not the AGI themselves, and they likely never will be.

It is important to me that people understand I am genuinely excited and optimistic about the TNN. I am excited about what it adds to the toolbox, and about the surprising capabilities it has so far displayed. However, it is not the solution, much like early purely DNN-based vision models were not a viable solution to computer vision, at least in the task of feature extraction. TNNs display many exciting emergent properties which on the surface appear to contradict some of my claims. I am open to, accepting of, and vocal about those facts.

This post is not about disparaging the TNN, but rather it is a call for sanity and decorum around the topic.